Authors: André Kilchenmann, Lukas Rosenthaler

Category: Paper:Poster

Keywords: annotation, moving image, transcription, multimedia platform

Moving Images and the Connection to other Media Types

In film and media studies, there has always been a desire to annotate and analyze movies as easily as still images. Video is also an interesting research object in historical and ethnographic research, where recordings need to be transcribed. This may be a simple interview transcription, but in disciplines like sociology or film and media studies it can be more complicated: the scholar also wants to annotate the source and to describe the composition of the image, the soundtrack, or the movement of the camera. The question has always been: how can we watch and describe a moving image at the same time?

The moving image has a continuous linearity and only makes sense in a dynamic state. This makes the medium difficult to grasp, and the researcher cannot write notes and comments directly on it. In contrast to a still image, a moving image is always bound to technical devices [1], which does not make annotation any easier. In today's digital world, moving image research is easier than before, because we can work with a single technical device, namely the computer. This is convenient, but awkward without suitable software. A researcher working with moving images needs one program for the video file and another for text processing, and has to switch between at least two (proprietary) programs.

At our lab we are developing a purely web-based virtual research environment (VRE): a system for annotation and linkage of sources in arts and humanities (SALSAH). The project originated from an art-historical research project about early prints in Basel (Incunabula Basiliensia). It allows for the collaborative annotation and linking of digitized sources [2], and for defining and linking special regions inside a source (e.g. a region of interest on a picture).

In recent years the project has grown enormously. Different projects from art history, history, and media and cultural studies are using the SALSAH platform. They all work with different kinds of sources and metadata. The kind of data does not matter, because we have implemented a semantic graph database in the back-end: a triple store service based on the Semantic Web standard RDF (Resource Description Framework). This data storage and long-term preservation service is called KnORA: Knowledge Organization, Representation, and Annotation [3].
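
To illustrate why a triple store is indifferent to the kind of source, the following minimal sketch models subject-predicate-object statements in plain Python. It is not the KnORA implementation or its API; the class names, identifiers such as `movie:1`, and predicates such as `ex:isStillOf` are hypothetical and chosen only for illustration.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One RDF-style statement: subject, predicate, object."""
    subject: str
    predicate: str
    obj: str


class TripleStore:
    """A toy in-memory store; all names here are illustrative assumptions."""

    def __init__(self):
        self._triples = set()

    def add(self, subject, predicate, obj):
        self._triples.add(Triple(subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None):
        """Return all triples matching the given pattern (None = wildcard)."""
        return sorted(
            t for t in self._triples
            if (subject is None or t.subject == subject)
            and (predicate is None or t.predicate == predicate)
            and (obj is None or t.obj == obj)
        )


store = TripleStore()
# Different media types are described with the same statement structure.
store.add("movie:1", "rdf:type", "ex:MovingImage")
store.add("still:7", "rdf:type", "ex:StillImage")
store.add("still:7", "ex:isStillOf", "movie:1")
store.add("text:3", "ex:isScreenplayOf", "movie:1")

# Everything that points at the movie, regardless of its media type.
linked = [t.subject for t in store.match(obj="movie:1")]
```

Because every resource is described by the same kind of statement, a new source type or metadata field only requires new predicates, not a new table layout.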

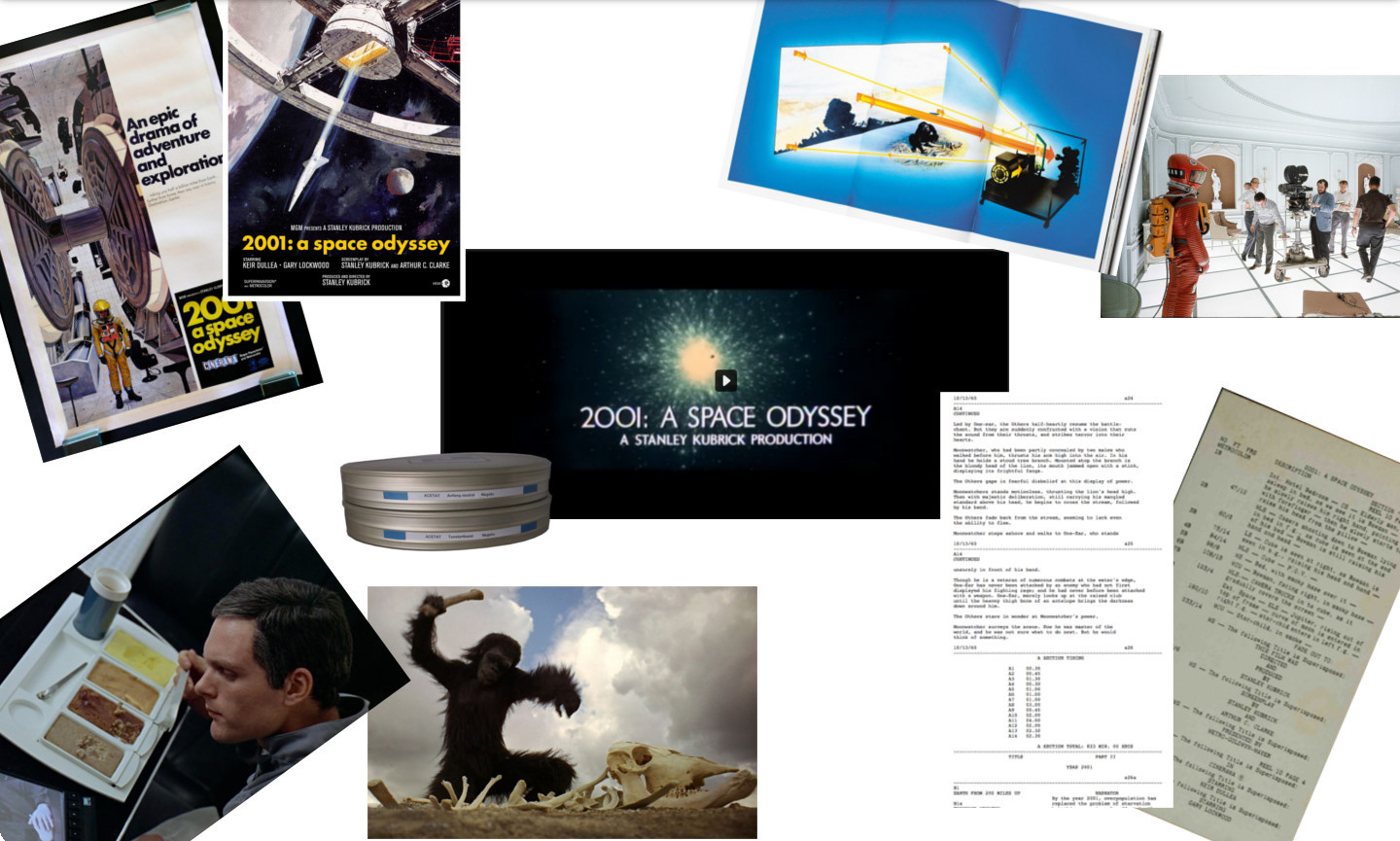

With the splitting of SALSAH into two parts (back-end and front-end), we have a proper RESTful API on the one hand and the possibility of various front-ends on the other. The main page for researchers remains SALSAH [4]. The idea is still the annotation and linkage of any kind of media. One module is the moving image transcription tool. It should be possible to bring images, text, and audiovisual sources together, as shown in figure 1.

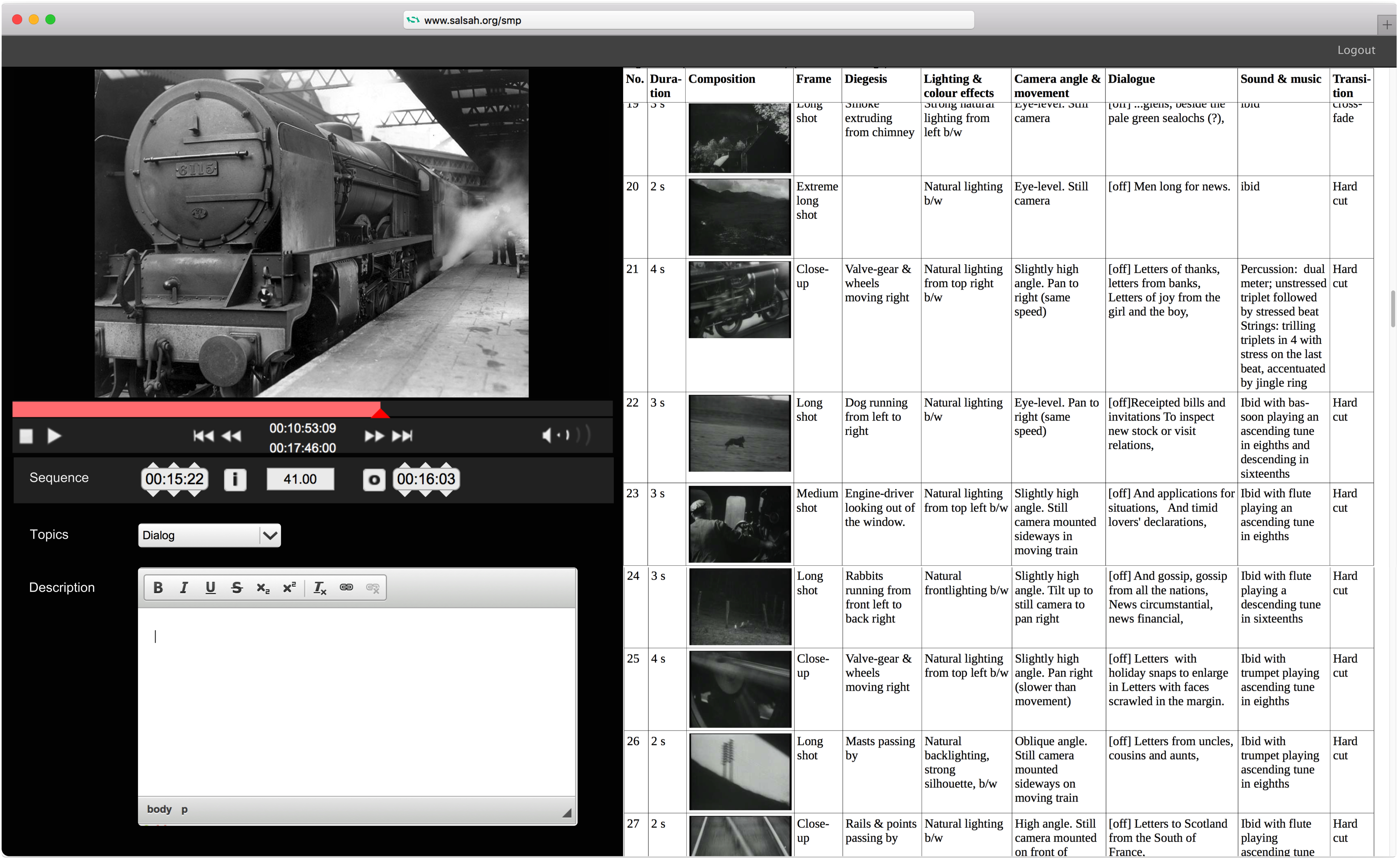

For a deep moving image analysis, we want to be able to connect a movie, or just a sequence of it, with related objects like the screenplay, film stills, or making-of descriptions. Connecting the movie with these additional objects and their own metadata enables powerful (re)search possibilities on the main moving image. The moving image module in SALSAH is not a standalone solution like other video analysis tools: the network behind every movie is visible. Another difference from conventional video transcription tools is the representation of the transcription. Especially in film and media studies, the researcher has to describe different aspects of the movie: camera position, sound, actors, text, etc. [5, 6]. The result is a table-based sequence protocol, as shown in figure 2 on the right-hand side.

The moving image is the main object in the new module. The film analyst can describe and annotate every scene with a simple transcription tool at the bottom of the SALSAH movie player (SMP). On the right-hand side we can see the result of the transcription: the sequence protocol. The figure shows just a simple example. Researchers can define the columns of the protocol themselves. Thanks to the RDF triple store in KnORA, the metadata can be adapted to the research question. In the end, it should be possible to export this table-based sequence protocol either as shown or as a subtitle file for use with other media players.
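
The planned subtitle export could look roughly like the following sketch, which turns a sequence protocol with user-defined columns into SubRip (SRT) text. This is an assumption about one possible export format, not the SALSAH implementation; the `Sequence` class, its fields, and the example column names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sequence:
    """One row of a sequence protocol (hypothetical structure)."""
    start: float   # start time in seconds
    end: float     # end time in seconds
    columns: dict  # user-defined column name -> description


def srt_timestamp(seconds):
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(protocol):
    """Render each protocol row as one numbered SRT cue."""
    blocks = []
    for index, seq in enumerate(protocol, start=1):
        # Each user-defined column becomes one line of the cue text.
        text = "\n".join(f"{name}: {value}" for name, value in seq.columns.items())
        timing = f"{srt_timestamp(seq.start)} --> {srt_timestamp(seq.end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # Cues are separated by a blank line.
    return "\n".join(blocks)


protocol = [
    Sequence(0.0, 4.2, {"Camera": "long shot", "Sound": "street noise"}),
    Sequence(4.2, 9.0, {"Camera": "close-up", "Sound": "dialogue"}),
]
srt_text = to_srt(protocol)
```

Since the columns are kept as a plain mapping, the same rows could also be written out as a table, for example one column per key, without changing the data model.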